Getting Started with AI: When a Gaming PC Becomes a Learning Tool

With AI-enhanced programming on the rise, there is a case for giving your kids a more powerful compute and graphics platform. Yes, there are benefits such as playing the latest games, but an emerging benefit is the ability to run advanced AI tools and LLMs locally on the system. This contrasts sharply with the entry-level platforms many kids start on, which are limited to web browsing or productivity software. In this article, we explore how a gaming PC, packed with a high-end GPU and fast storage, can serve as both an elite gaming setup and an efficient platform for learning to code with AI.

The idea of a gaming system turned AI workstation isn’t new; we touched on part of this topic in an article last year covering the differences between the Dell Alienware R16 and Dell Precision 5860. While that article focused on the performance differences between consumer and workstation-grade GPUs and drivers across a wide range of workloads, this one focuses on why a gaming system can add value for someone learning with AI. AI tooling isn’t slowing down either; many of the announcements around the new NVIDIA 50-series GPUs center on AI.

If you have a child currently in a K-12 school, the school-supplied system is generally a basic Chromebook. These platforms have advantages in cost, serviceability, and access to technology, but they don’t work well for advanced use cases. Enter the home gaming PC, which can offer countless hours of gaming fun but also comes equipped with some of the most cost-effective hardware for AI development work.

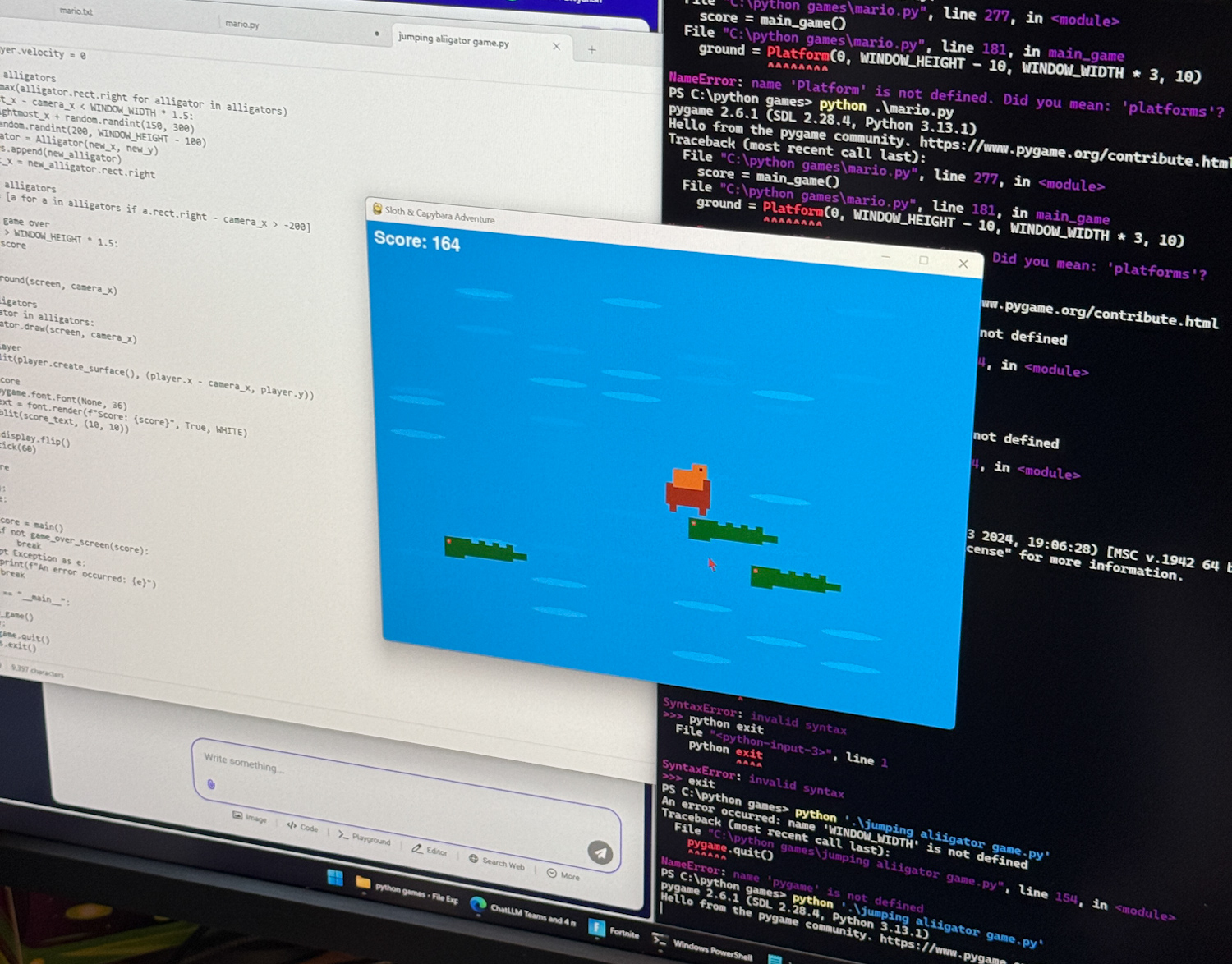

This started when my 11-year-old asked if he could use AI to make a video game. With some help, I introduced him to Abacus.AI and showed him how to craft a text prompt to write Python code, install Python on Windows, and run the games he was designing. All of this happened in the span of 15 minutes. He had no prior programming experience, and I think this was the first time I had installed a Python environment on Windows. It was pretty amazing to witness firsthand.

He was on a roll with different game ideas he wanted to try. Text-based games came first, such as rock paper scissors, but that evolved into a platform game with a GUI. The first version was a small red block that could bounce as the game started and needed some physics help. It quickly evolved into a sloth jumping across platforms.

The final version of that game turned into a Sloth and Capybara adventure game of characters jumping over water filled with alligators. It was a surreal experience to witness, but it really drives home the point that children can do amazing things with the right tools in front of them.
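To give a sense of scale for a first text-based project like rock paper scissors, here is a minimal Python sketch. It is not the code produced in the sessions described above; the names `CHOICES`, `BEATS`, `judge`, and `play_round` are hypothetical and chosen only for illustration.

```python
import random

# The three valid moves, and which move each one defeats.
CHOICES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def judge(player, computer):
    """Return 'tie', 'player', or 'computer' for one round."""
    if player not in BEATS or computer not in BEATS:
        raise ValueError("moves must be rock, paper, or scissors")
    if player == computer:
        return "tie"
    return "player" if BEATS[player] == computer else "computer"


def play_round(player, rng=random):
    """Pick a random move for the computer and judge the round."""
    computer = rng.choice(CHOICES)
    return computer, judge(player, computer)
```

A simple game loop would read the player's move with `input()`, call `play_round`, and print the computer's move and the winner until the player decides to stop.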

In this article, we will look at a few areas of AI that can easily be explored in a home environment, for both younger and older audiences. The easiest place to start, requiring no specialized hardware, is a cloud-based AI offering such as Abacus.AI or OpenAI. These services offer a wide range of text, image, video, and other models.

Cloud-Based AI Solutions

Cloud-based AI solutions have changed how we interact with and learn from artificial intelligence. These platforms provide access to cutting-edge models without requiring users to invest in expensive hardware. Popular options include ChatGPT and Anthropic’s Claude. However, one platform that stands out for its versatility and value is Abacus.AI.

For example, CodeLLM allows users to code directly in an online IDE while interacting with a chatbot for real-time assistance. This feature is perfect for beginners learning to code or for experienced developers looking to prototype quickly. The Code Playground feature takes it a step further by enabling users to execute their code directly in the browser, eliminating the need for local setup. This is particularly useful for creating interactive animations.

Abacus.AI also includes features like Deep Research, which integrates AI into research workflows, and an AI Engineer that can automatically create bots for specific tasks. These features make it easy to get started with AI, whether you’re exploring coding, generating images, or building interactive applications. For parents or educators, this means that even a basic laptop or Chromebook can become a powerful learning tool when paired with a cloud-based solution like Abacus.AI.

Local AI

A natural question arises: if cloud-based solutions are so cheap, accessible, and easy to use, why even bother with local AI? The answer lies in the unique advantages that local AI offers, which can make it a compelling choice for specific users, especially those with high-end gaming PCs or a desire for more control over their AI workflows.

Those advantages come down to privacy, control, and accessibility. Running AI models locally keeps your data on your machine, which is ideal for sensitive projects or personal use. It also provides offline access, making it reliable in areas with poor connectivity or during server outages. For heavy users, local AI can be more cost-effective in the long run, as there are no recurring usage fees once the hardware is set up. Local AI also offers freedom and flexibility: you can customize and fine-tune models, experiment with open-source options, and even train your own models. A hands-on approach also helps build valuable technical skills.

Hardware Requirements

Running AI locally comes with hardware challenges, which is why repurposing a gaming system for it makes sense. While some local AI suites can run on a CPU, which we discuss below, almost all prefer a GPU. Right now, NVIDIA GPUs are the most popular choice, with VRAM being the gating factor. Taking the 40-series NVIDIA GeForce lineup as an example, here is how much VRAM each card has:

- NVIDIA GeForce RTX 4050 (6GB VRAM, laptop only)

- NVIDIA GeForce RTX 4060 (8GB VRAM)

- NVIDIA GeForce RTX 4070 (12GB VRAM)

- NVIDIA GeForce RTX 4080 (16GB VRAM)

- NVIDIA GeForce RTX 4090 (24GB VRAM)

Generally speaking, VRAM requirements grow as you increase the model size or the precision of the model. Here is a breakdown of the DeepSeek R1 models, ranging from 1.5B to 70B parameters, at FP8 and FP4 precision. It quickly becomes clear that most consumer GPUs are limited to the smaller model sizes. The VRAM footprint also fluctuates depending on what you are doing with the model, so you need some headroom too.

| DeepSeek R1 Model Size | Inference VRAM (FP8) | Inference VRAM (FP4) |
|---|---|---|
| 1.5B | ~1.5 GB | ~0.75 GB |
| 7B | ~7 GB | ~3.5 GB |
| 8B | ~8 GB | ~4 GB |
| 14B | ~14 GB | ~7 GB |
| 32B | ~32 GB | ~16 GB |
| 70B | ~70 GB | ~35 GB |
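The figures in the table follow a simple rule of thumb: parameter count multiplied by bytes per parameter (one byte at FP8, half a byte at FP4). The Python sketch below reproduces that arithmetic. The function names and the 20% headroom default are assumptions made for illustration, not measured values, and the estimate covers model weights only.

```python
# Bytes needed to store one parameter at each precision from the table.
BYTES_PER_PARAM = {"FP8": 1.0, "FP4": 0.5}


def estimate_weight_vram_gb(params_billions, precision):
    """Approximate VRAM (GB) for the model weights alone."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits_in_vram(params_billions, precision, vram_gb, headroom=0.2):
    """Check whether the weights plus a safety margin fit on a card.

    headroom is an assumed fraction (20% by default) reserved for
    context and other runtime overhead; real usage varies.
    """
    needed = estimate_weight_vram_gb(params_billions, precision) * (1 + headroom)
    return needed <= vram_gb
```

For example, `estimate_weight_vram_gb(14, "FP4")` gives 7.0, matching the table, while `fits_in_vram(32, "FP8", 24)` is false because the weights alone exceed a 24GB card.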

Running DeepSeek R1 or Llama 3.1 locally with Ollama

One of the more straightforward ways to deploy a local LLM is with Ollama. Ollama is designed with user-friendliness in mind, making it accessible even for those who are not deeply technical. Its interface simplifies the process of downloading, managing, and interacting with large language models (LLMs). On Windows, installing Ollama is straightforward: head over to the Ollama website, click download (choose your OS), and then run the setup file.

Once installed, Ollama’s command-line interface (CLI) allows users to easily pull and run models with simple commands, such as ollama pull and ollama run. The CLI can be accessed by clicking the Windows Start button, typing “cmd”, and opening the Command Prompt. Below is an example showing models already downloaded on the system, kicking off DeepSeek R1 14B and writing a story about a sloth building a house.

Beyond the CLI, Ollama also offers Ollama Hub, a web-based interface that provides a user experience similar to cloud AI solutions, making it accessible even for those who prefer a graphical interface.

What makes Ollama particularly appealing is its extensive community support and rapid development cycle. Installation takes only a few seconds, and getting someone up to speed on downloading or running models is just as quick. The longest delay for most users will be download time, as many of these models are several gigabytes in size.
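The ollama pull and ollama run commands described above can also be scripted, which is a natural next step for a young programmer. The following is a minimal Python sketch, assuming Ollama is installed and on the system PATH. The helper names `ollama_command` and `run_ollama` are hypothetical, and the model tag `deepseek-r1:14b` mentioned afterward is assumed to correspond to the DeepSeek R1 14B model discussed in this article.

```python
import shutil
import subprocess


def ollama_command(action, model, prompt=None):
    """Build the argument list for `ollama pull` or `ollama run`."""
    if action not in ("pull", "run"):
        raise ValueError("action must be 'pull' or 'run'")
    if prompt is not None and action != "run":
        raise ValueError("a prompt only makes sense with 'run'")
    cmd = ["ollama", action, model]
    if prompt is not None:
        # Passing the prompt as an argument runs it once and exits,
        # instead of opening the interactive chat session.
        cmd.append(prompt)
    return cmd


def run_ollama(action, model, prompt=None):
    """Execute the command and return whatever Ollama prints."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama was not found on PATH")
    result = subprocess.run(
        ollama_command(action, model, prompt),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,
    )
    return result.stdout
```

With Ollama installed, calling `run_ollama("pull", "deepseek-r1:14b")` followed by `run_ollama("run", "deepseek-r1:14b", "Write a story about a sloth building a house.")` would mirror the manual steps, returning the model's reply as a string.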

It’s important to note that if you intend to run a local LLM, each model will have different system requirements, with a GPU highly preferred to run things efficiently. In the system resource shot above, Ollama runs the DeepSeek R1 14B model, which uses just under 11GB of VRAM. While the model is loaded, GPU usage sits idle, but as soon as you start interacting with it, the usage will spike.
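One way to check VRAM usage yourself while a model is loaded is NVIDIA's nvidia-smi command-line tool, which is installed with the NVIDIA driver. The Python sketch below is a minimal example, assuming an NVIDIA GPU and driver are present; the helper names `parse_gpu_memory` and `read_gpu_memory` are hypothetical.

```python
import subprocess

# Ask nvidia-smi for a compact CSV line per GPU; memory values are in MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Turn nvidia-smi CSV output into a list of dictionaries."""
    gpus = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        # Split from the right so the two numeric fields are always last.
        name, used, total = (field.strip() for field in line.rsplit(",", 2))
        gpus.append({"name": name, "used_mib": int(used), "total_mib": int(total)})
    return gpus


def read_gpu_memory():
    """Run nvidia-smi and return the parsed memory usage per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)
```

Calling `read_gpu_memory()` before and after loading a model shows how much VRAM that model occupies on your particular card.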

Running LLMs on Lower-End Hardware: Quantized Models

For users whose GPUs have less VRAM, quantized models offer a practical solution. These are essentially compressed versions of LLMs that store their weights at lower precision, reducing memory requirements so they can run on less powerful GPUs. While quantization comes at the cost of some performance and accuracy, it makes running advanced models possible on a wider range of hardware.
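The trade-off between memory and accuracy can be seen in a toy example. The Python sketch below applies simple symmetric 8-bit quantization to a short list of weights. It is a simplified illustration only: the quantization schemes used for real LLMs are more elaborate (often 4-bit and applied block by block), and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    scale = max(abs(w) for w in weights) / 127
    if scale == 0:
        scale = 1.0  # all-zero input: any scale works
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


def max_error(weights):
    """Largest difference between original and restored weights."""
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    return max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of the four bytes of a 32-bit float, and `max_error` shows the price: restored values differ from the originals by up to about half of the scale step.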

It’s also possible to run LLMs on CPUs, though this comes with a further performance trade-off. CPU-based execution is significantly slower than GPU-based processing, but it can still be a viable option for smaller models or users without access to a dedicated GPU.

llama.cpp

One of the most popular tools for running LLMs on CPUs is llama.cpp, a native C++ application designed for efficient inference of large language models. Despite its name, llama.cpp is not limited to LLaMA models. Its lightweight design and optimization for CPU usage make it an excellent choice for users who want to experiment with local AI on modest hardware. By supporting quantized models, llama.cpp further reduces resource requirements, enabling even consumer-grade hardware to run advanced LLMs efficiently.

Stable Diffusion Image Generation with ComfyUI

For local image generation, ComfyUI is an easy way to get started right away. To get the instance running smoothly, we followed this guide on Stable Diffusion Art. The steps involve downloading the ComfyUI instance in a portable 7z archive, extracting the folder, and downloading an existing model checkpoint.

Running ComfyUI is a bit different from running an LLM in Ollama. Open the ComfyUI folder you extracted and saved your checkpoint into, then double-click run_cpu if your system has integrated or low-end graphics, or run_nvidia_gpu if it has a capable dedicated NVIDIA graphics card.

This opens a command prompt window in the background that looks a bit complex, but it quickly opens the ComfyUI GUI in your default web browser.

The GUI shows the workflow of the image generation model, although you can jump right in by replacing the text inside the CLIP Text Encode prompt. In this example, we generated a group of four images of a sloth playing a video game. In the Empty Latent Image node, we changed the width and height from 512 to 1024 to make the images larger and set batch_size to 4 to generate several images at once.
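Those two settings have a large effect on how much work the GPU has to do. Stable Diffusion generates images in a smaller latent space before decoding them to pixels. The Python sketch below assumes the common Stable Diffusion layout of 4 latent channels at one-eighth of the pixel resolution; other model families may differ, and the function names are hypothetical.

```python
def empty_latent_shape(width, height, batch_size=1, channels=4, downscale=8):
    """Shape of the latent tensor as (batch, channels, height, width)."""
    return (batch_size, channels, height // downscale, width // downscale)


def element_count(shape):
    """Total number of values in a tensor of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


# Default single 512x512 image versus four 1024x1024 images.
default_latent = empty_latent_shape(512, 512, batch_size=1)
larger_latent = empty_latent_shape(1024, 1024, batch_size=4)
growth = element_count(larger_latent) // element_count(default_latent)
```

Here `growth` works out to 16: doubling both dimensions quadruples the latent size, and a batch of four quadruples it again, which is why larger batches of bigger images need noticeably more VRAM and time.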

Final Thoughts: Empowering the Next Generation with AI

The rapid evolution of AI and its growing accessibility mean that today’s gaming PCs can serve a far greater purpose than just entertainment. By giving kids early access to AI, whether through cloud-based offerings or local instances on capable systems, we’re giving them the tools to explore machine learning, experiment with AI-driven creativity, and develop valuable programming skills that will be increasingly relevant in the years to come.

From coding simple games to running LLMs and generating AI-powered art, a well-equipped home PC can become a robust learning environment. Whether using cloud-based AI services or diving into local deployments with tools like Ollama, ComfyUI or countless others, the opportunities for young learners to engage with AI are more abundant than ever.

Ultimately, the decision to invest in a more capable system isn’t just about upgrading hardware; it’s about fostering curiosity, creativity, and technical skills. As AI continues to shape the future, giving kids the chance to experiment hands-on with these technologies could be one of the most impactful investments in their education and development.
